Distributed Computing

What is it?

A distributed system is a system whose components are spread across many machines on a single network.

- this network can be anything from a local network to something like a VPC (Virtual Private Cloud), in cases where we want geographic distribution.

A client-server architecture might be thought of as the simplest implementation of a distributed system.

In distributed computing, a problem is divided into many tasks, each of which is solved by one or more computers, which communicate with each other via message passing.

Data partition and replication strategies lie at the core of any distributed system.

- Replication - Keeping a copy of the same data on several different nodes, potentially in different locations.

- provides redundancy and improves performance (giving us more nodes to read from).

- Partitioning - Splitting a big database into smaller subsets (partitions) so each can be assigned to different nodes

- ex. sharding.

A distributed system is not to be confused with decentralized architecture.

- Distributed means that the processing is shared across multiple nodes, but the decisions may still be centralized and use complete system knowledge.

Distributed systems typically have the following characteristics:

- concurrency of components

- lack of a global clock

- failure of components

From a Single computer to a distributed network

With a single computer, when the hardware is working correctly, the same operation always produces the same result (it is deterministic). If there is a hardware problem, the result is normally a total system failure. Alas, an individual computer with properly functional software is either fully functional or entirely broken (this is deliberate, so as to simplify troubleshooting).

In a distributed system, some computers may be broken in some unpredictable way while others are working fine. This is known as partial failure, and they add considerable difficulty because they are a class of failure that is nondeterministic.

- sometimes we may not even know if something failed or succeeded, since the time it takes for a message to travel across a network is also nondeterministic. If you send a request and don’t get a response, it’s not possible to distinguish whether (a) the request was lost, (b) the remote node is down, or (c) the response was lost.

- this can be summed up as "problems in the network cannot reliably be distinguished from problems at a node".

- this nondeterminism and possibility of partial failure is what adds difficulty to distributed systems.

Building a distributed system is about building a reliable system on top of unreliable components.

Consensus

The difficulty involved in reaching consensus amongst all areas of a distributed system is one of the most important and fundamental problems of architecting a distributed system.

The consensus problem is normally formalized as follows: one or more nodes may propose values, and the consensus algorithm decides on one of those values. Everyone decides on the same outcome, and once you have decided, you cannot change your mind.

- ex. In an example of seat-booking of an airplane, when several customers are concurrently trying to buy the last seat, each node handling a customer request may propose the ID of the customer it is serving, and the decision indicates which one of those customers got the seat.

A consensus algorithm must satisfy the following properties

- Uniform agreement - No two nodes decide differently.

- Integrity - No node decides twice.

- Validity - If a node decides value v, then v was proposed by some node.

- Termination - Every node that does not crash eventually decides some value.

Consensus must be achieved for...

- leader election. In a database with single-leader replication, all nodes must agree on which node is the leader.

- atomic commits. In a database that supports transactions spanning several nodes/partitions, we have the possibility of a transaction failing on some nodes but succeeding on others. If we want to maintain transaction atomicity, we must have all nodes in agreement about the outcome of a transaction (either they all abort+rollback or commit).

Zookeeper and etcd provide consensus algorithms.

Why do it?

Key characteristics of a distributed system include Scalability, Reliability, Availability, Efficiency, and Manageability.

How does it work?

Messages may be passed between nodes of a distributed system by using technologies like HTTP, RPCs, or message queues

Historically, distributed referred to the fact that there were multiple computers all working in unison to solve some problem. Nowadays, the term is much broader, and can be used to refer to a system of multiple autonomous processes existing on a single machine. In this case, a node of the "network" would be a single process, rather than an entire computer.

- In the case of distributed computing, a node is simply something that passes messages and has its own local memory.

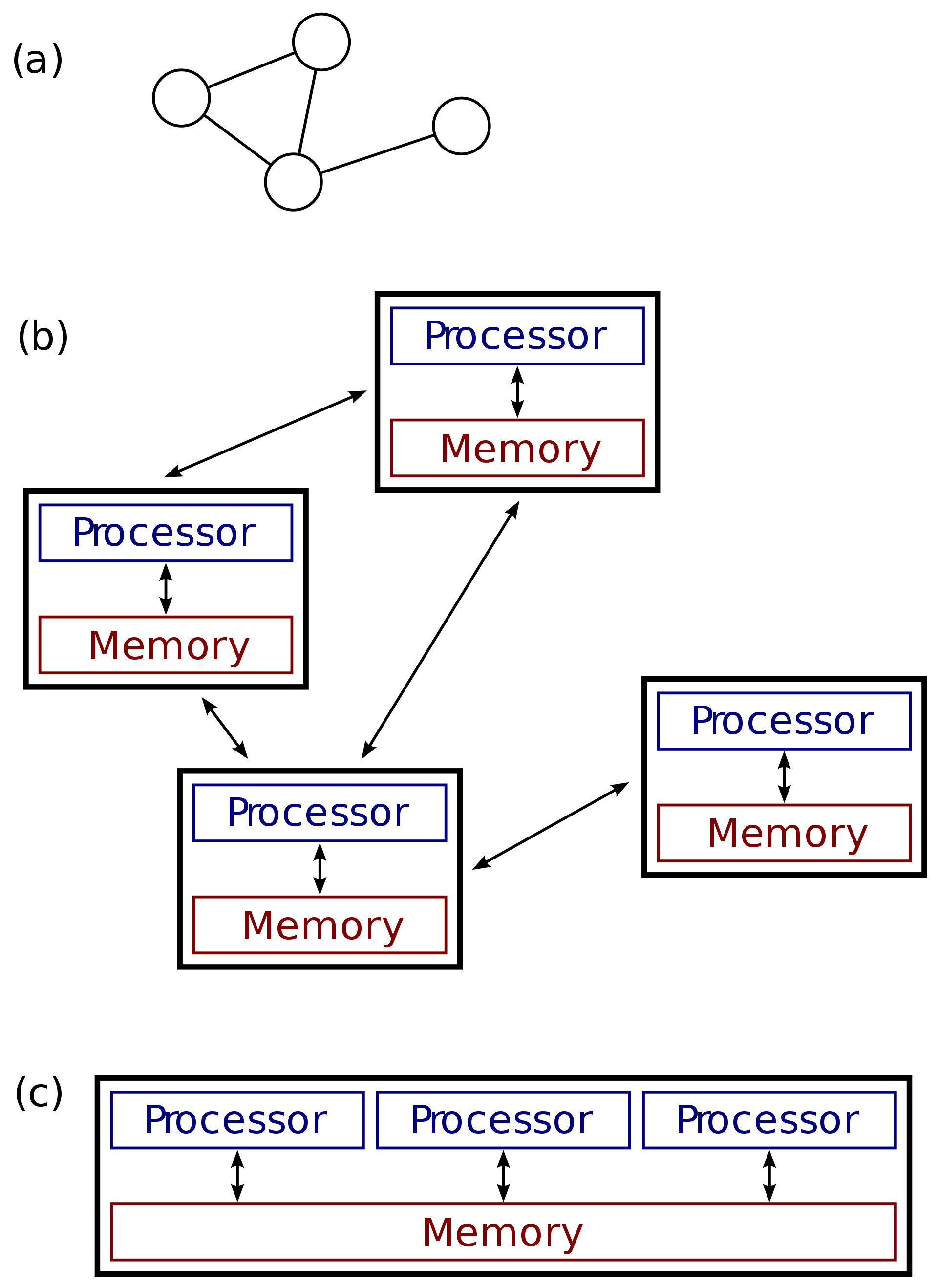

There is no clear distinction between distributed systems and parallel systems, and there is a lot of overlap, though one distinction is that the nodes of a parallel system all share the same memory, while nodes of a distributed system manage their own:

- Examples of distributed systems: MMOs, the internet itself

Considerations for distributed systems

What is it?

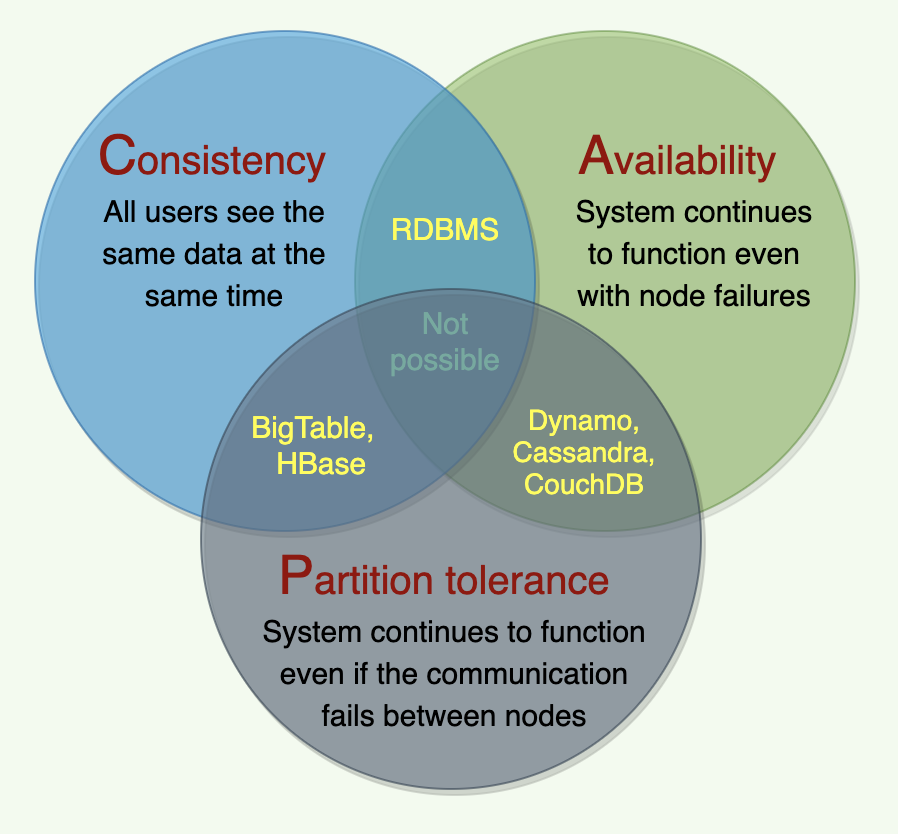

At times when the network is working correctly, a system can provide both consistency (ie. linearizability) and total availability. When a network fault occurs, you have to choose between either linearizability or total availability.

- Thus, a better way of phrasing CAP would be "when a network partition occurs, a distributed system must choose between either consistency or availability".

- A more reliable network needs to make this choice less often, but at some point the choice is inevitable.

- note: this seems to be at least somewhat of a controversial tone, given that SQL databases occupy CA. This view above would state that CA systems are incoherent, though this opinion might not appear to be reputable, given the prevalence of relational databases.

- regarding CA systems then, what CAP theorem would argue is that these systems have an inherent weakness, which is that in the case of a network partition, they will be forced to give up either consistency or availability.

CAP is sometimes presented as Consistency, Availability, Partition tolerance: pick 2 out of 3. Unfortunately, putting it this way is misleading because network partitions are a kind of fault (due to the failure of network devices), so they aren’t something about which you have a choice: they will happen whether you like it or not.

Why must it choose between availability and consistency?

The reason the system must choose is because if there is a network partition, different parts of the system may result in having different views of the data.

- If the system chooses to prioritize consistency, it will ensure that all nodes have the same view of the data, but it may sacrifice availability to all clients, since some nodes may be unavailable due to the partition.

- If the system chooses to prioritize availability, it will continue to provide service to all clients, but it may not be able to ensure that all nodes have the same view of the data, which can lead to inconsistencies.

Consistency (C)

All nodes see the same data at the same time. This means users can read or write from/to any node in the system and will receive the same data. It is equivalent to having a single up-to-date copy of the data.

Depending on the business needs of the application, we may wish to make the trade-off of sacrificing consistency for availability. Say we are implementing a facebook newsfeed. It is acceptable for the application to miss some data points here and there. For instance, if a friend of yours uploads a new post, it's not of critical importance that you get that data right away. That is, if one client is getting its data from a data store that is not up-to-date, then it's not the end of the world (assuming everything can be made up-to-date in a timely manner).

The "C" refers to only one type of consistency: linearizability

The basic idea behind linearizability is simple: to make a system appear as if there is only a single copy of the data.

linearizability is a recency guarantee on reads and writes of a single item in a database.

- In a linearizable system, as soon as one client successfully completes a write, all clients reading from the database must be able to see the value just written. Maintaining the illusion of a single copy of the data means guaranteeing that the value read is the most recent, up-to-date value, and doesn’t come from a stale cache or replica.

Consider that a unique constraint in a relational database requires linearizability

Depending on our use-case, we must decide if our system requires linearizability.

- ex. we may determine that for our flight booking application, the likelihood of two users trying to book the same seat on a flight to be remote, and thus something that we don't feel compelled to account for. In the event of this situation, we might just consider it a business expense to compensate the affected party in another way.

Availability (A)

Availability means every request received by a non-failing node in the system must result in a response. Even when severe network failures occur, every request must terminate. In simple terms, availability refers to a system’s ability to remain accessible even if one or more nodes in the system go down.

- in other words, high availability results from running in a redundant configuration on multiple machines

In an AP (Availability/Partition-tolerant) system, the system is essentially saying “I will get you to a node, but I do not know how good the data you find there will be”; or “I can be available and the data I show will be good, but not complete.”

Partition tolerance (P)

a.k.a robustness

Partition tolerance is the ability of a data processing system to continue processing data even if a network partition causes communication errors between subsystems

- A single node failure should not cause the entire system to collapse.

- A partition is a communication break (or a network failure) between any two nodes in the system, i.e., both nodes are up but cannot communicate with each other.

A partition-tolerant system continues to operate even if there are partitions (ie. communication breakdowns) in the system. Such a system can sustain any network failure that does not result in the failure of the entire network. Data is sufficiently replicated across combinations of nodes and networks to keep the system up through intermittent outages.

When to prioritize Availability or Consistency?

Go with eventual consistency if favouring availability, and strong consistency if favouring consistency

Availability

- When eventual consistency can be tolerated.

- When the system needs to remain operational and responsive even in the presence of network partitions or node failures.

- ex. real-time messaging, online gaming, social networks, CDNs, IoT systems

Consistency

- When the application demands strict data consistency and requires all nodes to have a consistent view of the data at all times.

- When data conflicts and inconsistencies can lead to significant negative consequences.

- ex. financial transactions (e.g. banking app), healthcare systems, inventory management, critical infrastructure (e.g. power grids, transportation systems), blockchain

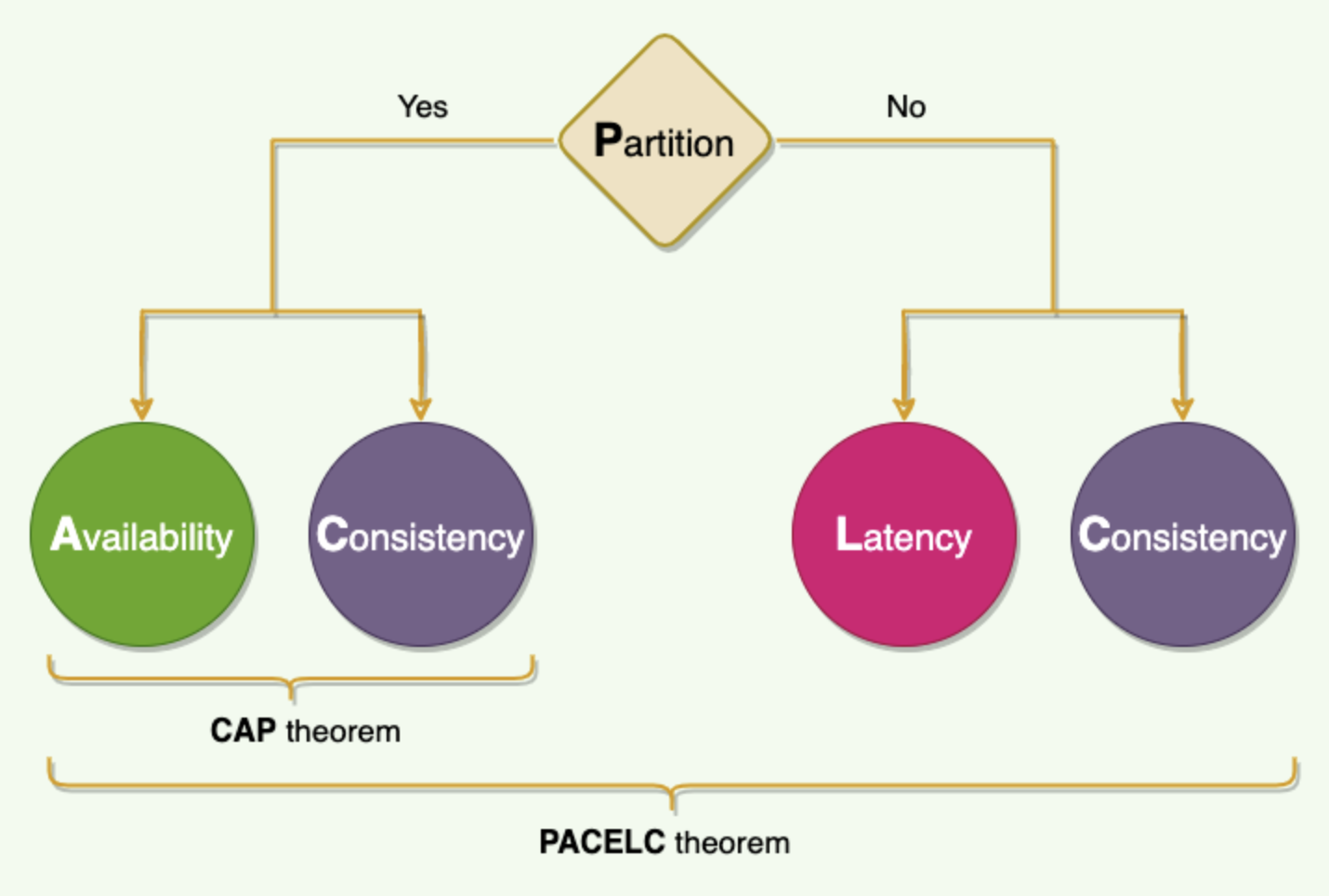

PACELC Theorem

One place where the CAP theorem is silent is what happens when there is no network partition? What choices does a distributed system have when there is no partition?

The PACELC theorem states that in a system that replicates data:

- if there is a partition (‘P’), a distributed system can tradeoff between availability and consistency (i.e., ‘A’ and ‘C’);

- else (‘E’), when the system is running normally in the absence of partitions, the system can tradeoff between latency (‘L’) and consistency (‘C’).

The first part of the theorem (PAC) is the same as the CAP theorem, and the ELC is the extension. The whole thesis is assuming we maintain high availability by replication. So, when there is a failure, CAP theorem prevails. But if not, we still have to consider the tradeoff between consistency and latency of a replicated system.

Examples

- Relational databases are CP

- CouchDB is AP

- it's Partition-Tolerant, every node is Available at all times, but it's only eventually Consistent.

- DynamoDB and Cassandra are PA/EL systems: They choose availability over consistency when a partition occurs; otherwise, they choose lower latency.

- BigTable and HBase are PC/EC systems: They will always choose consistency, giving up availability and lower latency.

- MongoDB can be considered PA/EC (default configuration): MongoDB works in a primary/secondaries configuration. In the default configuration, all writes and reads are performed on the primary. As all replication is done asynchronously (from primary to secondaries), when there is a network partition in which primary is lost or becomes isolated on the minority side, there is a chance of losing data that is unreplicated to secondaries, hence there is a loss of consistency during partitions. Therefore it can be concluded that in the case of a network partition, MongoDB chooses availability, but otherwise guarantees consistency. Alternately, when MongoDB is configured to write on majority replicas and read from the primary, it could be categorized as PC/EC.

Problems specific to distributed systems

The typical problems in a distributed system are the following:

- maintaining the system state (liveness of nodes)

- communication between nodes

The potential solutions to these problems are as follows:

- centralized state management service (eg Zookeeper, etcd)

- A centralized state management service such as Apache Zookeeper can be configured as the service discovery to keep track of the state of every node in the system

- provides a strong consistency guarantee, the primary drawbacks are the state management service becomes a single point of failure and runs into scalability problems for a large distributed system

- peer-to-peer state management service

- The peer-to-peer state management approach is inclined towards high availability and eventual consistency. The gossip protocol algorithms can be used to implement peer-to-peer state management services with high scalability and improved resilience 1.

UE Resources

- Distributed Systems in One Lesson (Kafka Guy)

- Considerations for distributed systems

- Distributed Transactions Without Atomic Clocks

Children

- CAP Theorem

- CDN

- Distributed Cache

- Fault Tolerance

- Load Balancer

- Locks

- RPC (Remote Procedure Call)

- Storage

- Strategies

Backlinks